FEATURE PRIORITIZATION

COMPANY

Large-scale hiring platform

Employer-facing post-integration experience

Using quantitative prioritization methods to turn feature sprawl into a defensible product strategy for engaging employers at scale.

THE PROBLEM

After a major platform integration, teams had access to new technical capabilities but lacked a clear, evidence-based reason for employers to spend sustained time on the platform. Multiple feature ideas were under consideration, but there was no shared understanding of which would actually earn trust, change behavior, or justify investment.

Debate centered on preferences and assumptions rather than a common decision framework.

THE SOLUTION

I made the organization's implicit beliefs about employer value explicit and framed them as testable hypotheses. I then used survey research with MaxDiff and TURF analysis to measure how employers traded off five value dimensions when forced to choose. The resulting evidence gave product, research, and leadership a shared decision framework for prioritizing features, replacing debates over preferences.

CLIENT

Enterprise product organization

INDUSTRY

HR Technology / Marketplace Platforms

TOOLS

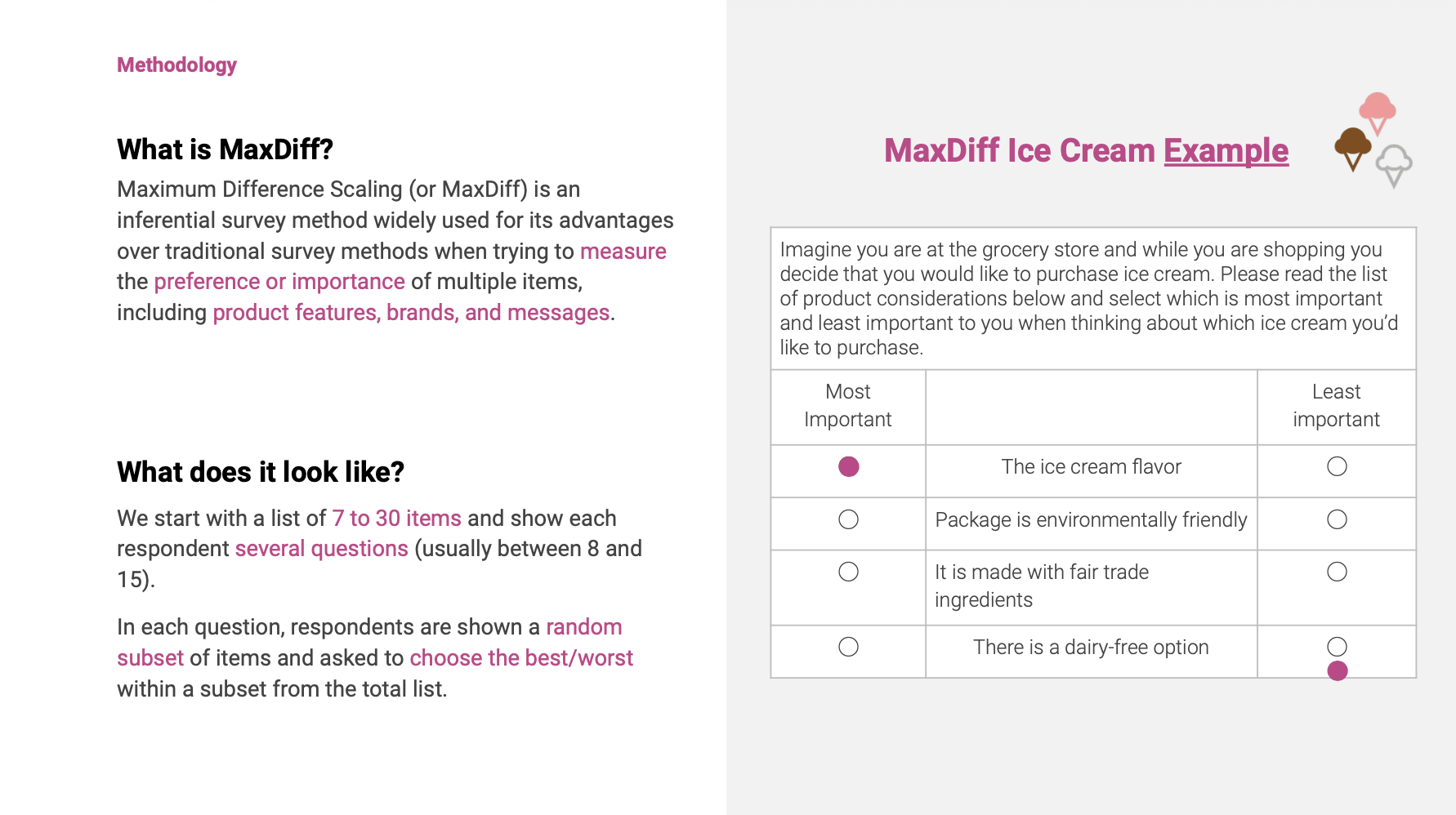

MaxDiff (Maximum Difference Scaling)

TURF analysis

Survey research

Quantitative synthesis

Executive readouts

ROLE

UX Research Lead

DURATION

Multi-month engagement

When teams argue about features, they’re usually arguing without a shared map. This work provided one.

When teams debate features, they’re often debating without a shared understanding of value, reach, or tradeoffs. This work began by slowing the conversation down long enough to make those dimensions explicit. Rather than treating prioritization as a matter of opinion or intuition, the research created a common map that allowed product, research, and leadership to see the same landscape, weigh decisions against the same evidence, and move forward without re-litigating assumptions. What follows isn’t a story about finding the “right” feature, but about designing clarity into decision-making so strategy can actually hold under pressure.

INVESTIGATE

Before articulating hypotheses, I began with an investigative phase focused on understanding how employers were actually experiencing indexed jobs following a major platform change. Instead of starting from feature ideas or future-state concepts, I worked backward from existing signals like prior research, internal narratives, and lived employer practice to surface tensions, inconsistencies, and open questions. The goal at this stage wasn’t to define solutions, but to build enough shared understanding to name the claims that would later be tested.

Retrospective synthesis: I reviewed existing research, strategy artifacts, and materials related to the platform transition to understand what was already known, what had been assumed, and where prior work left unresolved questions. This helped distinguish between settled decisions and inherited narratives—avoiding re-litigation while exposing gaps that needed fresh inquiry.

Stakeholder sensemaking: I facilitated conversations with cross-functional partners to surface how different teams understood employer needs, post-transition value, and the role of indexed jobs going forward. These discussions made implicit beliefs explicit—revealing where teams were aligned, where stories diverged, and where confidence exceeded evidence. The aim wasn’t consensus, but a clearer map of assumptions worth examining.

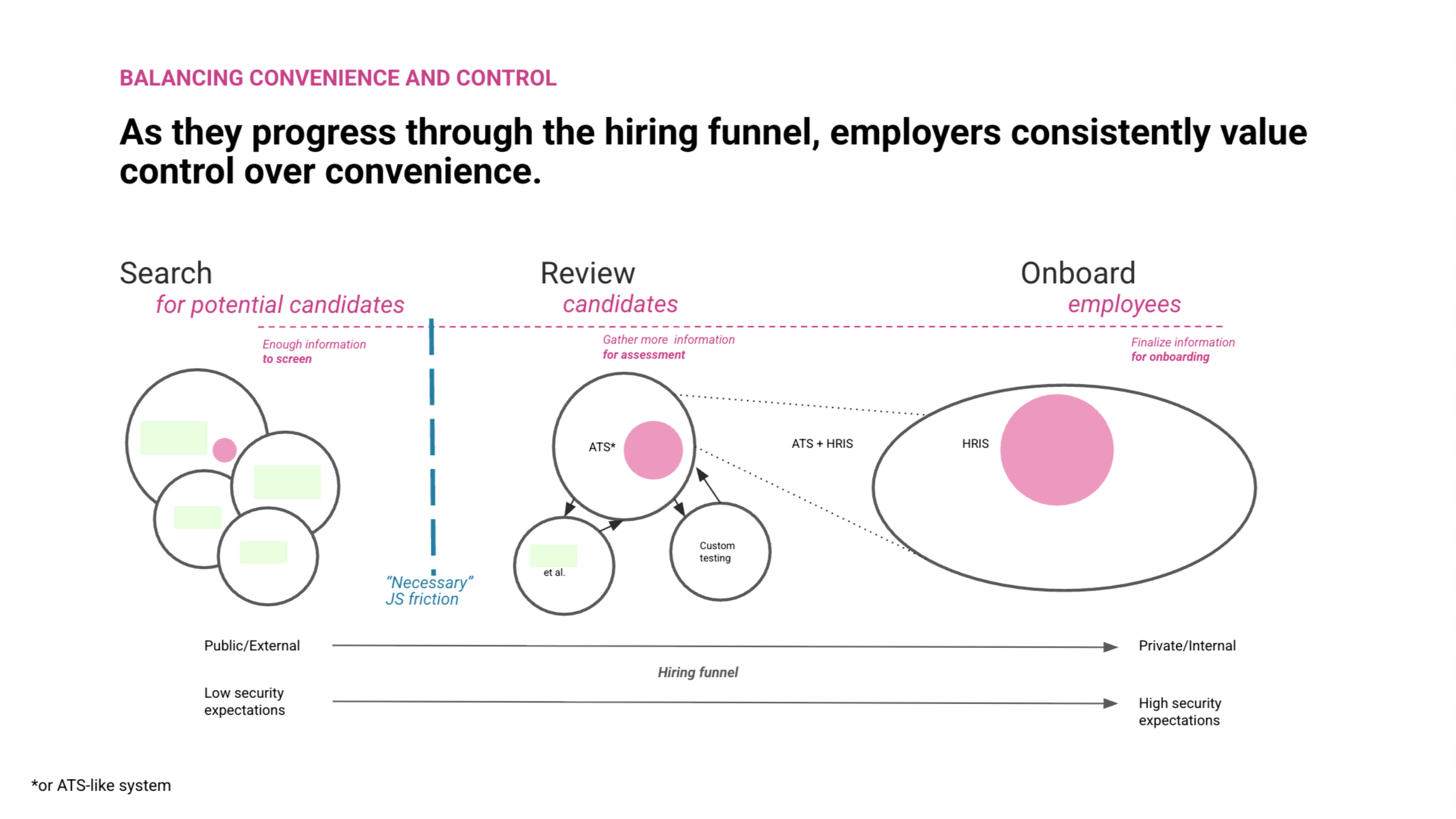

Generative employer interviews: I conducted in-depth interviews with employers managing indexed jobs to understand real hiring practices across tools, roles, and organizational contexts. These conversations focused on how employers made decisions, coordinated internally, and judged progress—surfacing tradeoffs between convenience, control, trust, and effort. Rather than validating concepts, the interviews provided grounded material for articulating testable claims about value and behavior.

FRAME

Before discussing features, this work began by articulating the underlying claims the organization was already making about what employers value, how they decide where to invest time, and what kinds of evidence earn trust. The statements below represent those claims in their clearest form. They functioned as framing artifacts: a shared set of hypotheses that made the logic behind proposed capabilities explicit and testable. The feature space was then treated as material to be cut from these hypotheses, not the source of them. What followed was a process of examining which claims held up through qualitative inquiry and which warranted rigorous quantitative stress-testing.

1. Employers decide where to invest time based on evidence of hiring progress, not on access to tools or features.

2. Proof of outcomes is a primary driver of employer trust.

3. Early signals about match quality meaningfully influence continued engagement.

4. Employers are more likely to act when recommendations are embedded directly into their workflow.

5. Integration across related tools is experienced as value only when it reduces duplicated effort or uncertainty.

6. Fragmented hiring data is interpreted as a signal of platform immaturity.

7. Behavioral signals are only useful when they reduce decision effort at the moment of action.

8. Employers prefer fewer, clearly connected capabilities.

9. Foundational capabilities enable value but don’t motivate engagement alone.

10. Effort must be visibly connected to outcomes over time.

11. Visibility into past interactions increases confidence in outreach.

12. Reminders influence follow-through only when trust exists.

13. New behaviors are adopted when framed as extensions of existing practice.

14. Sustained use depends on consistent proof of value.

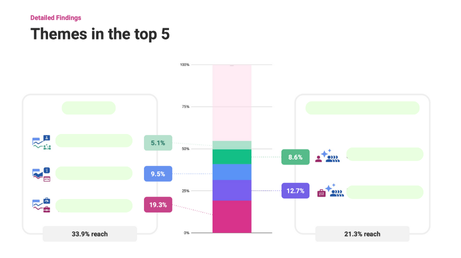

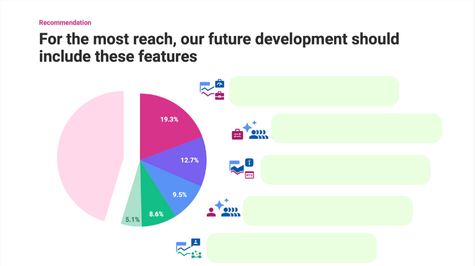

TARGET

In this phase, targeting meant narrowing attention across five distinct value dimensions within the Indexed Connections surface. Each dimension represented a different way employers might define value, and the work focused on understanding how strongly each held up when employers were forced to make tradeoffs.

PLATFORM INTEGRITY

Targeting began by isolating baseline platform integrity as its own dimension of value. This category captured capabilities related to system consistency, transparency, and reliability, allowing the work to assess whether employers actively prioritize confidence in the platform itself when evaluating where to invest attention.

JOB EFFECTIVENESS

This dimension targeted job-level effectiveness as a discrete signal of value. By separating job effectiveness from broader reporting or insight, the work focused on whether employers orient first around evidence that individual jobs are progressing and producing meaningful results.

PERFORMANCE VISIBILITY

CANDIDATE ASSESSMENT

This dimension targeted early-stage decision support. By grouping capabilities related to candidate assessment, the work focused attention on whether employers prioritize help evaluating fit and deciding who to engage before outcomes are visible.

BEHAVIORAL SIGNALS

Behavioral Signals targeted higher-order understanding of candidate activity and intent. Separating these capabilities made it possible to test whether insight-driven value stands on its own, or whether its usefulness depends on being paired with clearer evidence of effectiveness or progress.
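The case study does not publish its MaxDiff design or scoring model. The Python below is a hedged sketch of one way forced tradeoffs across five dimensions can become relative-importance scores. It uses simple best-minus-worst counting, a common first-pass MaxDiff analysis. Many studies instead fit a multinomial logit or hierarchical Bayes model. The item codes `dim_1` through `dim_5` and every response are hypothetical placeholders, not study data. `best_worst_scores` is an illustrative helper name.

```python
from collections import Counter

# Hypothetical MaxDiff tasks. Each task lists the items shown to a respondent,
# plus the item picked as most important ("best") and least important ("worst").
# Item codes and responses are invented placeholders, not study data.
RESPONSES = [
    {"shown": ["dim_1", "dim_2", "dim_5"], "best": "dim_2", "worst": "dim_5"},
    {"shown": ["dim_3", "dim_4", "dim_2"], "best": "dim_2", "worst": "dim_3"},
    {"shown": ["dim_1", "dim_4", "dim_5"], "best": "dim_4", "worst": "dim_1"},
    {"shown": ["dim_1", "dim_3", "dim_5"], "best": "dim_3", "worst": "dim_5"},
]


def best_worst_scores(responses):
    """Return (times best - times worst) / times shown for every shown item."""
    shown, best, worst = Counter(), Counter(), Counter()
    for task in responses:
        shown.update(task["shown"])  # count each exposure of each item
        best[task["best"]] += 1
        worst[task["worst"]] += 1
    # Counter returns 0 for items never picked, so every shown item gets a score.
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}


if __name__ == "__main__":
    scores = best_worst_scores(RESPONSES)
    for item, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{item:<8} {score:+.2f}")
```

Dividing by the number of times each item was shown keeps items that appeared in more tasks from being over-credited. A positive score means an item was chosen as most important more often than least important.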

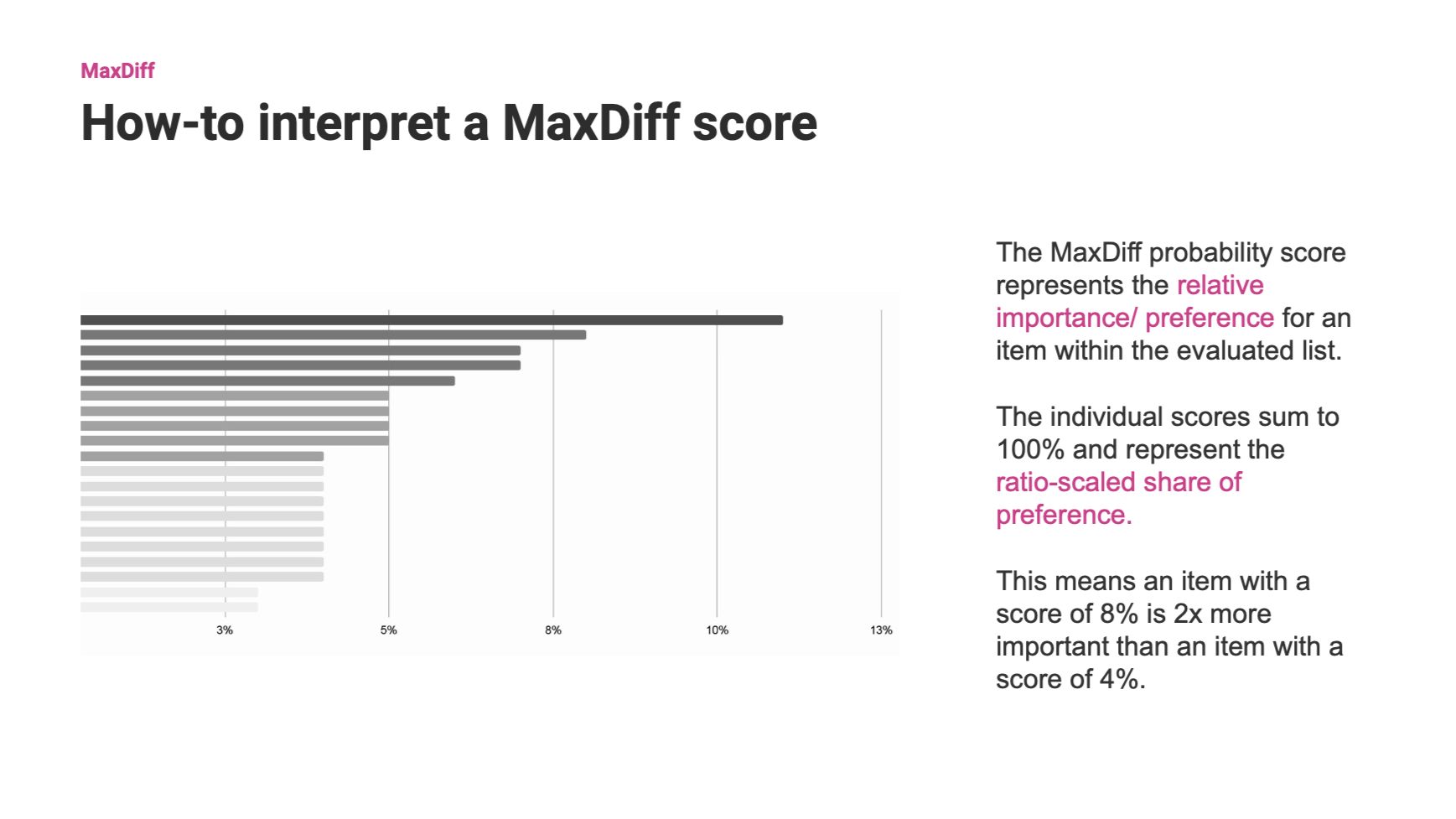

A closer look at support tools for communicating methodological complexity

GROUP SIGNALS INTO A COHERENT VALUE STRUCTURE

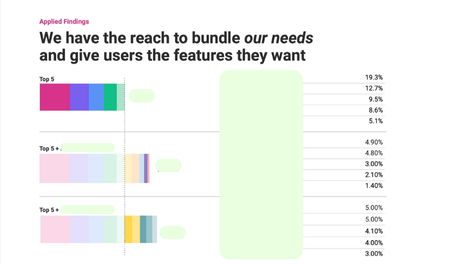

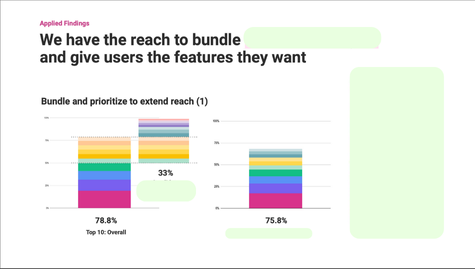

Once relative importance across categories was visible, the work focused on organizing those signals into a structure that made relationships legible. Rather than treating results as a ranked list, this step examined how different value dimensions related to one another. This clarified which signals reinforced each other, which competed, and which functioned primarily as enablers. This organization transformed raw prioritization into a shared understanding of how value coheres across the surface.
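TURF (Total Unduplicated Reach and Frequency) appears among the tools, but the case study does not say how reach was defined. The sketch below assumes each respondent's "reached" set has already been derived, for example the items they would actively use. It then exhaustively scores every k-item combination by unduplicated reach and breaks ties on frequency. The example shows why some signals compete rather than reinforce: two items with overlapping audiences add little reach together, while complementary items extend it. The data and the `turf` helper are hypothetical.

```python
from itertools import combinations

# Hypothetical input: for each respondent, the placeholder items that "reach" them.
# How reach is defined is an assumption; these sets are invented for illustration.
REACHED = [
    {"dim_1", "dim_2"},
    {"dim_2"},
    {"dim_2", "dim_3"},
    {"dim_4"},
    {"dim_4", "dim_5"},
    set(),
]
ITEMS = {"dim_1", "dim_2", "dim_3", "dim_4", "dim_5"}


def turf(reached, items, k):
    """Score every k-item portfolio by unduplicated reach, then frequency.

    reach     = share of respondents reached by at least one portfolio item
    frequency = average number of portfolio items reaching each respondent
    """
    n = len(reached)
    results = []
    for combo in combinations(sorted(items), k):
        portfolio = set(combo)
        hits = [len(portfolio & r) for r in reached]
        reach = sum(1 for h in hits if h > 0) / n
        frequency = sum(hits) / n
        results.append((combo, reach, frequency))
    # Highest reach first; frequency breaks ties.
    results.sort(key=lambda row: (row[1], row[2]), reverse=True)
    return results


if __name__ == "__main__":
    for combo, reach, freq in turf(REACHED, ITEMS, k=2)[:3]:
        print(combo, f"reach={reach:.2f}", f"freq={freq:.2f}")
```

Exhaustive search works for a handful of items. With many candidate capabilities, greedy forward selection (adding whichever item raises reach the most) is a common approximation.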

DISTINGUISH ENABLING VALUE FROM MOTIVATING VALUE

The organizing phase also separated capabilities that establish confidence from those that actively drive engagement. This distinction helped clarify why some dimensions mattered early, while others only became meaningful once trust or momentum existed. By making this difference explicit, the work prevented foundational needs from being misread as low priority and ensured that higher-order value was interpreted in the right context.

ORGANIZE

A closer look at [redacted] data visualizations

RE-ANCHOR IN-FLIGHT WORK TO SHARED EVIDENCE

As an addendum, the findings were applied back to work already in progress across multiple teams. Rather than redirecting efforts, this step used the organized results as a common reference point, helping teams see how their existing initiatives related to the same underlying value signals. This created a shared language for discussing tradeoffs and reduced fragmentation across parallel efforts. It also let teams connect their work to one another through evidence rather than through alignment exercises.

SOCIALIZING FINDINGS

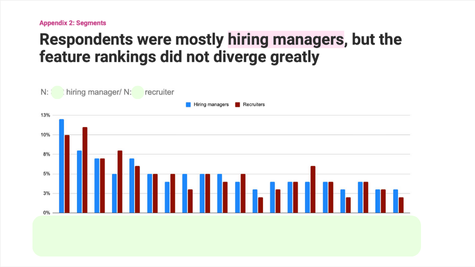

Rather than delivering results once and moving on, the work entered an active phase of sharing and discussion across teams. Findings were presented in multiple forums and adapted to different audiences, creating space for questions, disagreement, and interpretation. This approach treated research as a shared resource rather than a static readout.

CONNECTING TISSUES

Across conversations, the findings were used to connect parallel efforts and surface shared tradeoffs, rather than to issue directives. By engaging openly with uncertainty and inviting others into the reasoning behind the results, the work enabled teams to relate their decisions to the same evidence base even when they ultimately made different choices.

ADRESSING CHALLENGERS

Particular attention was given to teams whose in-flight work or assumptions were most destabilized by the results. Instead of smoothing over tension or reframing findings to preserve alignment, the work made room for exploration and guided teams to understand how the evidence intersected with their goals, constraints, and responsibilities. This allowed disagreement to surface productively rather than fragmenting trust.

ACTION

The work scaled through reuse rather than rollout. The framing, value categories, and tradeoff language continued to serve as reference points as teams discussed in-flight work and explored new ideas. Because the research focused on underlying value rather than specific solutions, it remained applicable as context shifted.

The findings were also shared externally at the company’s international product showcase. Presenting the work in this setting tested whether the thinking held beyond its original audience and reinforced its usefulness as a way to talk about hiring value, trust, and decision-making at a broader level.

Rather than closing the work, scale marked the point where the research became part of how conversations continued supporting alignment, disagreement, and decision-making without needing to be reintroduced.

SCALE

Holli Downs, PhD

UX Researcher
